Recently, there has been a flood of memes showing that LLMs like ChatGPT can't reliably count how many times a specific letter appears in certain words.

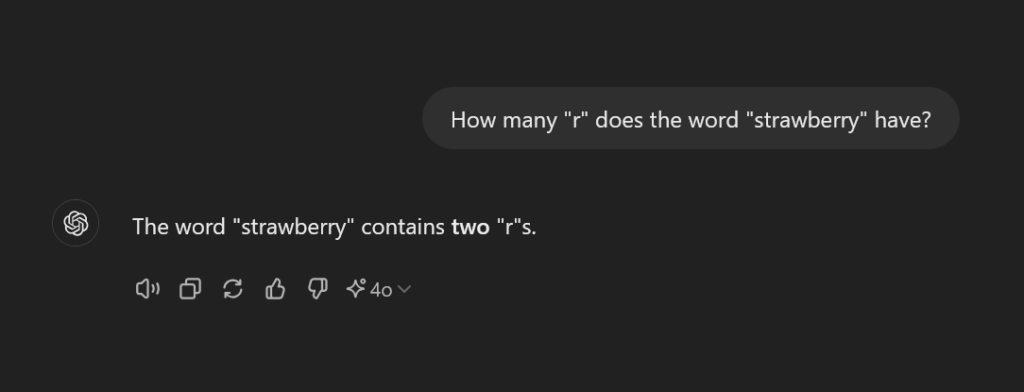

Q: How many “r” does the word “strawberry” have?

A: The word “strawberry” contains two “r”s.

The irony is that most LLMs can actually write you a great script in almost any programming language that will tell you with 100% accuracy exactly what letters are in any word and in what positions! 🤣
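For instance, a short Python script along these lines does the job. This is our own example, not output from any particular model, and the function name `letter_report` is hypothetical:

```python
def letter_report(word: str, letter: str) -> tuple[int, list[int]]:
    """Count occurrences of `letter` in `word` (case-insensitive) and list their 1-based positions."""
    target = letter.lower()
    positions = [i for i, ch in enumerate(word.lower(), start=1) if ch == target]
    return len(positions), positions


if __name__ == "__main__":
    count, positions = letter_report("strawberry", "r")
    print(f"'r' appears {count} times, at positions {positions}")
```

Running it reports that “r” appears 3 times, at positions 3, 8 and 9.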

Then why are they so bad at counting on their own?

How Do LLMs Work?

Training

LLMs are trained on large, diverse datasets that include books, websites, and other text sources. During training, an LLM “learns” to predict the next word in a sentence based on the words that came before it.
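To make “predicting the next word” concrete, here is a deliberately tiny sketch: a bigram model that only counts which word follows which in a made-up corpus. Real LLMs use neural networks over tokens and look at far more context than a single previous word, so treat this purely as an illustration of the training objective. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """'Train' by counting, for each word, which words follow it in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if nothing ever followed it."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical training data.
    corpus = [
        "I like strawberry jam",
        "I like blueberry muffins",
        "you like strawberry jam",
        "we like strawberry ice cream",
    ]
    model = train_bigrams(corpus)
    print(predict_next(model, "like"))        # "strawberry" follows "like" most often
    print(predict_next(model, "strawberry"))  # "jam" follows "strawberry" most often
```

The model never stores any rule about strawberries; it only stores which words tended to follow which.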

Inference

When you ask a question, an LLM will generate a response by predicting the next most likely word (or sequence of words) based on the input you’ve provided. LLMs don’t “understand” in the way humans do. Instead, they generate text based on patterns that they have learnt during training.
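Generation repeats that prediction step: pick a likely next word, append it, and predict again. The sketch below uses greedy decoding (always take the single most probable word) over a small hand-written probability table. The table and its numbers are invented for illustration; real models compute these probabilities with a neural network over tokens and often sample rather than always taking the top choice.

```python
from typing import Dict, List

# Hypothetical next-word probabilities, made up for illustration.
NEXT_WORD_PROBS: Dict[str, Dict[str, float]] = {
    "strawberries": {"are": 0.6, "taste": 0.4},
    "are": {"red": 0.5, "sweet": 0.3, "small": 0.2},
    "red": {"and": 0.7, "fruits": 0.3},
    "and": {"sweet": 0.9, "juicy": 0.1},
}


def generate_greedy(start: str, max_new_words: int = 10) -> str:
    """Repeatedly append the most probable next word until the table has no continuation."""
    words: List[str] = [start]
    for _ in range(max_new_words):
        options = NEXT_WORD_PROBS.get(words[-1])
        if not options:
            break  # the toy table has no entry for this word, so stop
        words.append(max(options, key=options.get))
    return " ".join(words)


if __name__ == "__main__":
    print(generate_greedy("strawberries"))
```

With this table, `generate_greedy("strawberries")` returns “strawberries are red and sweet”: a fluent sentence produced only by following stored word statistics.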

Why Did the Mistake Happen?

Pattern matching

Sometimes, when processing a query, an LLM may produce a quick response that “seems correct” based on common patterns, rather than carefully analyzing every detail. In this case, ChatGPT likely relied on a quick assessment of the word “strawberry” instead of counting each “r” individually.

Tokenization and Probability

The way the model processes text may also have contributed to the miscount. The word “strawberry” might have been tokenized in a way that didn’t account for each individual letter, and the response was a probabilistic guess that didn’t match the exact count.
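In general, a model assigns a raw score (a “logit”) to each candidate next token, and the softmax function turns those scores into probabilities that are then sampled from or simply maximized. The sketch below applies that idea to three candidate answers. The scores are invented for illustration and are not taken from ChatGPT; the point is that nothing in this step checks the answer against the actual letters.

```python
import math
import random
from collections import Counter
from typing import Dict


def softmax(scores: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Convert raw scores into probabilities that sum to 1 (lower temperature = sharper)."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one candidate at random, weighted by its probability."""
    candidates = list(probs)
    return rng.choices(candidates, weights=[probs[c] for c in candidates], k=1)[0]


if __name__ == "__main__":
    # Hypothetical scores for answers to "How many r's are in strawberry?"
    scores = {"two": 2.0, "three": 1.5, "one": 0.2}
    probs = softmax(scores)
    print({tok: round(p, 2) for tok, p in probs.items()})
    rng = random.Random(0)  # fixed seed so the run is repeatable
    print(Counter(sample(probs, rng) for _ in range(1000)))
```

With these made-up scores, the wrong answer “two” gets roughly 56% of the probability and “three” about 34%, so greedy decoding would answer “two” every time and sampling would answer it most of the time.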

What is Tokenization?

Tokenization is the process of breaking a sequence of text into smaller units called “tokens”. Depending on the tokenization approach used, tokens can be words, subwords, or even individual characters. It is a crucial preprocessing step that converts text into a numerical format that LLMs can process.
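The snippet below shows two of those granularities, word-level and character-level, plus the final step of mapping each token to an integer ID. It is a toy: the vocabulary is built on the fly from the input, whereas real LLM tokenizers use a fixed vocabulary learned in advance. The function names are our own.

```python
from typing import Dict, List


def word_tokens(text: str) -> List[str]:
    """Word-level tokenization: split on whitespace."""
    return text.split()


def char_tokens(text: str) -> List[str]:
    """Character-level tokenization: every character is its own token."""
    return list(text)


def build_vocab(tokens: List[str]) -> Dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: Dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens: List[str], vocab: Dict[str, int]) -> List[int]:
    """Replace each token with its ID; a list of numbers like this is what a model consumes."""
    return [vocab[tok] for tok in tokens]


if __name__ == "__main__":
    words = word_tokens("I like strawberry jam")
    print(words, encode(words, build_vocab(words)))

    chars = char_tokens("strawberry")
    print(chars, encode(chars, build_vocab(chars)))
```

At word level, “strawberry” becomes the single ID 2, so its letters are not visible at all; at character level, the ID for “r” appears three times.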

ChatGPT uses “subword tokenization” (specifically, a byte pair encoding scheme). As a result, the word “strawberry” might have been split into “straw” and “berry”, with each part treated as its own unit. This helps the model recognize pieces that also appear in other words, such as “straw” in “strawman” and “berry” in “blueberry”.
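One simple way to split words into subwords is greedy longest-match: at each position, take the longest piece that exists in the vocabulary. This is a stand-in for illustration, not the byte pair encoding GPT models actually use, and the tiny vocabulary below is hypothetical, chosen so that it reproduces the “straw” + “berry” split.

```python
import string
from typing import List, Set

# Hypothetical vocabulary: a few whole subwords plus every lowercase letter as a fallback.
VOCAB: Set[str] = {"straw", "berry", "blue", "man"} | set(string.ascii_lowercase)


def greedy_subword_tokenize(word: str, vocab: Set[str] = VOCAB) -> List[str]:
    """Split a lowercase `word` by repeatedly taking the longest prefix found in `vocab`."""
    tokens: List[str] = []
    i = 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry matches {word[i:]!r}")
    return tokens


if __name__ == "__main__":
    for w in ["strawberry", "blueberry", "strawman"]:
        print(w, "->", greedy_subword_tokenize(w))
```

Here “strawberry” becomes `["straw", "berry"]`, and the same “berry” token is reused for “blueberry”, which is exactly the kind of sharing that makes subwords useful for recognizing patterns.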

However, because each subword is handled as a separate unit, the precision of a letter count could have been subtly affected. Instead of analyzing “strawberry” as a whole, ChatGPT seems to have focused on the individual subwords, leading to a miscount of the “r”s, especially if its internal processing didn’t carefully combine both parts of the word.

In other words, it seems like the answer was based on a quick “glance” at one part of the word (like “berry”) and not both parts combined.
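The arithmetic behind that explanation is easy to check. Assuming the “straw” + “berry” split, the sketch below counts the “r”s inside each piece: “berry” alone holds two of them, which is exactly the wrong answer ChatGPT gave, while both pieces together hold three. This only illustrates the explanation and is not a trace of what happens inside the model; the helper name is hypothetical.

```python
from typing import Dict, List


def count_letter_per_token(tokens: List[str], letter: str) -> Dict[str, int]:
    """Count how often `letter` appears inside each (distinct) token."""
    return {tok: tok.count(letter) for tok in tokens}


if __name__ == "__main__":
    tokens = ["straw", "berry"]  # assumed split of "strawberry"
    per_token = count_letter_per_token(tokens, "r")
    print(per_token)                # {'straw': 1, 'berry': 2}
    print(per_token["berry"])       # 2: a "glance" at only the last piece
    print(sum(per_token.values()))  # 3: both pieces combined
```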

Conclusion

In tasks like counting or basic arithmetic, where precision is required, LLMs can sometimes make errors because these aren’t the tasks they were primarily designed to excel at. Hence, you may need to double-check the results yourself, especially when accuracy is critical.

Cheers!
